Duplicity – quick and easy backup

Many server administrators have to deal with backing up their files when building their infrastructure. Backups are essential: if something goes wrong, they let you quickly restore the system to its pre-crash state. The simplest tools for basic backups are tar, gzip and lftp. The first packs the required files into an archive, the second compresses it, and the third sends it to an external server set aside for such copies. Some administrators also encrypt the data for maximum security.
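For comparison, the classic tar, gzip and lftp routine can be sketched as a small shell function. This is a minimal sketch, not a production script. The function name `classic_backup` and the `FTP_HOST`/`FTP_USER` variables are hypothetical, and the upload step runs only when `FTP_HOST` is set.

```sh
#!/bin/sh
# Minimal sketch of a classic tar + gzip + lftp backup.
# classic_backup, FTP_HOST and FTP_USER are hypothetical names.
# Assumes the archive path contains no spaces (it is passed to lftp unquoted).
classic_backup() {   # classic_backup SOURCE_DIR ARCHIVE_DIR
    src=$1
    out_dir=$2
    stamp=$(date +%Y%m%d-%H%M%S)
    tarball="$out_dir/backup-$stamp.tar"

    mkdir -p "$out_dir" || return 1
    # 1. tar packs the directory into a single archive
    tar -cf "$tarball" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    # 2. gzip compresses it, replacing backup-*.tar with backup-*.tar.gz
    gzip "$tarball" || return 1
    archive="$tarball.gz"

    # 3. lftp sends it to the backup server (lftp prompts for the password)
    if [ -n "${FTP_HOST:-}" ]; then
        lftp -u "${FTP_USER:-backup}" -e "put $archive; bye" "$FTP_HOST" || return 1
    fi
    printf '%s\n' "$archive"
}
```

Every run produces a complete new archive, so each backup is as large as the data itself.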

Over time, however, these backups keep growing. They take up more and more space, take longer to create and take longer to send to other machines. What can we do about it? We can look for a tool that creates incremental backups.

This is where Duplicity comes in. It backs up selected directories as tar-format archive volumes, optionally encrypted with GnuPG, and saves them to a local or remote location. It uses the librsync library, which makes it easy to create incremental archives that store only the changed parts of files. The archives can be sent to another server using protocols such as FTP, IMAP, RSYNC, S3, SSH/SCP, WEBDAV and many others.


Duplicity commands

Duplicity installation

To download and install Duplicity, we need the EPEL repository:

yum install epel-release

Next, issue the following command:

yum install duplicity

After a while, the application will be ready to use.
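On servers set up by a script, the two yum commands can be made idempotent so that they run only when Duplicity is missing. This is a minimal sketch. `ensure_duplicity` is a hypothetical helper, and it must run as root to install packages.

```sh
#!/bin/sh
# Sketch: install Duplicity from EPEL only if it is not already available.
# ensure_duplicity is a hypothetical helper; yum needs root privileges.
ensure_duplicity() {
    if command -v duplicity >/dev/null 2>&1; then
        echo "duplicity is already installed"
        return 0
    fi
    # -y answers "yes" to yum's confirmation prompts (useful in scripts)
    yum install -y epel-release || return 1
    yum install -y duplicity
}
```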

Full backup

The commands below work entirely locally: both the source and the backup target are local directories.

duplicity full --no-encryption [path to the directory to back up] file://[path to the backup directory]

Makes a full backup without encrypting the archive.
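A common pitfall with local targets is the URL itself. An absolute path after `file://` produces three slashes (`file:///var/backups`), while a relative path is resolved against the current working directory. The sketch below uses a hypothetical `full_backup` wrapper. It checks that the source exists, creates the target directory and resolves it to an absolute path before calling Duplicity.

```sh
#!/bin/sh
# Sketch: full, unencrypted backup to a local directory.
# full_backup is a hypothetical wrapper around the command shown above.
full_backup() {   # full_backup SOURCE_DIR BACKUP_DIR
    src=$1
    dest=$2
    if [ ! -d "$src" ]; then
        echo "source directory $src does not exist" >&2
        return 1
    fi
    mkdir -p "$dest" || return 1
    # Resolve to an absolute path so the URL becomes file:///... and does not
    # depend on the current working directory (e.g. when run from cron).
    dest_abs=$(cd "$dest" && pwd -P) || return 1
    duplicity full --no-encryption "$src" "file://$dest_abs"
}
```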

Full backup with conditions

duplicity --full-if-older-than [number of days]D --no-encryption [path to the directory or file to back up] file://[path to the backup directory]

Makes a full, unencrypted backup if the last full backup is older than the specified number of days. Otherwise, it makes an incremental backup.
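`--full-if-older-than` is typically used in a scheduled job. Every run is incremental, and a new full backup starts automatically once the last one is old enough. This sketch rests on a few assumptions: the script path, the `nightly_backup` name, the `FULL_EVERY` variable and the example paths are hypothetical, and the backup directory must be given as an absolute path.

```sh
#!/bin/sh
# Sketch of a nightly job, e.g. saved as /usr/local/bin/nightly-backup.sh
# (hypothetical path) and scheduled with this crontab entry (02:30 daily):
#   30 2 * * * /usr/local/bin/nightly-backup.sh /var/www /backup/www
nightly_backup() {   # nightly_backup SOURCE_DIR ABSOLUTE_BACKUP_DIR
    # Full backup if the newest full one is older than FULL_EVERY
    # (default: 7 days), otherwise an incremental one.
    duplicity --full-if-older-than "${FULL_EVERY:-7D}" --no-encryption \
        "$1" "file://$2"
}

# Run only when called with both arguments (as from cron).
if [ "$#" -eq 2 ]; then
    nightly_backup "$1" "$2"
fi
```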

Incremental backup

duplicity --no-encryption [path to the directory or file to back up] file://[path to the backup directory]

Makes an incremental backup without encryption. If the target does not yet contain a full backup, Duplicity makes a full one instead.
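Duplicity takes one source directory per command. Several directories are therefore usually backed up into separate targets, each holding its own chain of full and incremental backups. This is a minimal sketch. `backup_all` and `BACKUP_ROOT` are hypothetical names, and `BACKUP_ROOT` must be an absolute path.

```sh
#!/bin/sh
# Sketch: incremental backup of several directories, each into its own
# subdirectory of BACKUP_ROOT (the first run of each target is a full backup).
# backup_all and BACKUP_ROOT are hypothetical; BACKUP_ROOT must be absolute.
backup_all() {   # backup_all DIR...
    root=${BACKUP_ROOT:-/backup}
    status=0
    for dir in "$@"; do
        # /var/www -> $root/var_www
        name=$(printf '%s' "${dir#/}" | tr '/' '_')
        mkdir -p "$root/$name" || { status=1; continue; }
        # Keep going if one directory fails, but report the failure at the end.
        duplicity --no-encryption "$dir" "file://$root/$name" || status=1
    done
    return "$status"
}
```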

Deleting full backups

duplicity remove-all-but-n-full [number] --no-encryption --force file://[backup path]

Deletes all full backups except the most recent [number] ones, together with the incremental backups that depend on the deleted ones. For example, a value of 1 deletes every full backup except the latest one.

Removing incremental backups

duplicity remove-all-inc-of-but-n-full [number] --no-encryption --force file://[backup path]

Deletes the incremental backups belonging to all but the most recent [number] full backups. The older full backups themselves are kept. The --force option is required to actually delete the files; without it, they are only listed on the screen.
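The two removal commands are often combined into a retention policy. This sketch assumes an unencrypted local target. `apply_retention`, `KEEP_FULL` and `KEEP_INC` are hypothetical names, and the defaults are only examples: keep the last three full backups, but keep incremental chains only for the newest one.

```sh
#!/bin/sh
# Sketch of a retention policy for an unencrypted Duplicity target.
# apply_retention, KEEP_FULL and KEEP_INC are hypothetical names.
apply_retention() {   # apply_retention TARGET_URL
    # 1. Keep the KEEP_FULL newest full backups (and their incrementals);
    #    everything older is deleted.
    duplicity remove-all-but-n-full "${KEEP_FULL:-3}" --no-encryption --force "$1" || return 1
    # 2. Of the remaining chains, keep incrementals only for the KEEP_INC
    #    newest full backups; older full backups stay, but only as snapshots
    #    of the day they were made.
    duplicity remove-all-inc-of-but-n-full "${KEEP_INC:-1}" --no-encryption --force "$1"
}
```

With the defaults, you can restore any point in the newest chain, plus the exact dates of the two older full backups.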

Restore the entire backup

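duplicity -t [time, e.g. 5D] --force --no-encryption file://[backup path] [path to where to unpack the backup]

Restores the whole backup as it was at the given time (5D means five days ago). The --force option lets Duplicity overwrite files that already exist in the destination.

Extracting a specific file from the backup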

duplicity -t [time, e.g. 1D] --force --no-encryption --file-to-restore [file or directory] file://[backup path] [path to where to unpack the backup]

Restores a single file or directory from the archive instead of the entire backup. The path given to --file-to-restore is relative to the root of the backed-up directory. With archives larger than a few gigabytes, this saves a lot of time.
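When restoring a single file, it is often safer to write it to a new location and compare it with the live copy than to overwrite the live copy with `--force`. This is a minimal sketch. `restore_file` is a hypothetical helper, and the example paths are only illustrative.

```sh
#!/bin/sh
# Sketch: restore one file or directory to a new path, never overwriting.
# restore_file is a hypothetical helper.
restore_file() {   # restore_file PATH_IN_BACKUP TIME TARGET_URL OUTPUT_PATH
    rel=$1     # relative to the backed-up directory, e.g. config/app.ini
    when=$2    # e.g. 1D = the state from one day ago
    url=$3     # e.g. file:///backup/www
    dest=$4    # must not exist yet
    if [ -e "$dest" ]; then
        echo "$dest already exists, refusing to overwrite it" >&2
        return 1
    fi
    # No --force here: the output path is new, so nothing can be overwritten.
    duplicity -t "$when" --no-encryption --file-to-restore "$rel" "$url" "$dest"
}
```

For example, running `restore_file config/app.ini 1D file:///backup/www /tmp/app.ini` and then `diff /tmp/app.ini /var/www/config/app.ini` shows what has changed since yesterday.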

Deleting backups older than a given number of days or months

duplicity remove-older-than [time, e.g. 15D or 1M] --force file://[backup path]

Deletes backups older than the given time. Without --force, Duplicity only lists the backup sets that would be deleted.

Listing the files stored in the backup

duplicity list-current-files [options] file://[backup path]

This is very useful if you want to extract only specific files or directories. You can also pipe the output through grep to search for a particular phrase, or redirect it to a file.
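The listing and age-based cleanup commands above can be wrapped in two small helpers. The first saves the listing to a file and searches it with grep. The second previews what `remove-older-than` would delete and adds `--force` only after explicit confirmation. This sketch assumes an unencrypted target. `find_in_backup`, `prune_backups`, `LISTING` and `CONFIRM` are hypothetical names.

```sh
#!/bin/sh
# Sketch of two housekeeping helpers for an unencrypted Duplicity target.
# find_in_backup, prune_backups, LISTING and CONFIRM are hypothetical names.

find_in_backup() {   # find_in_backup TARGET_URL PATTERN
    listing=${LISTING:-/tmp/backup-listing.txt}
    # Save the full listing, then search it with grep.
    duplicity list-current-files --no-encryption "$1" > "$listing" || return 1
    grep -- "$2" "$listing"
}

prune_backups() {   # prune_backups TIME TARGET_URL, e.g. 15D or 1M
    # Without --force Duplicity only lists the backup sets it would delete.
    duplicity remove-older-than "$1" "$2" || return 1
    if [ "${CONFIRM:-no}" = yes ]; then
        duplicity remove-older-than "$1" --force "$2"
    fi
}
```

Running `prune_backups 15D file:///backup/www` shows the preview only, while `CONFIRM=yes prune_backups 15D file:///backup/www` also performs the deletion.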

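Backup status and related information

duplicity collection-status [options] file://[path to the backup directory]

Summarizes the state of the backup repository: it lists the backup chains and sets found at the target, with the number of volumes in each.

Performance tests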

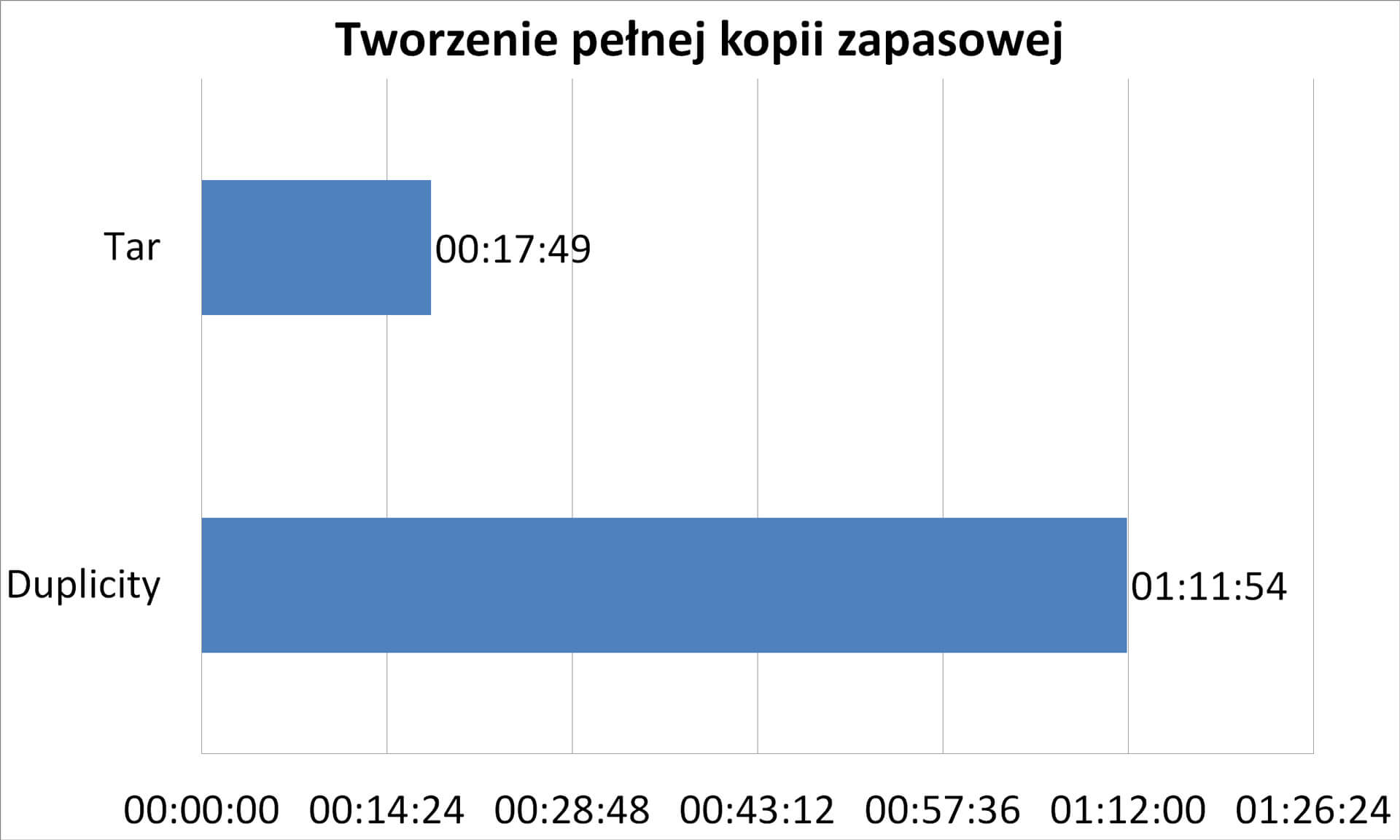

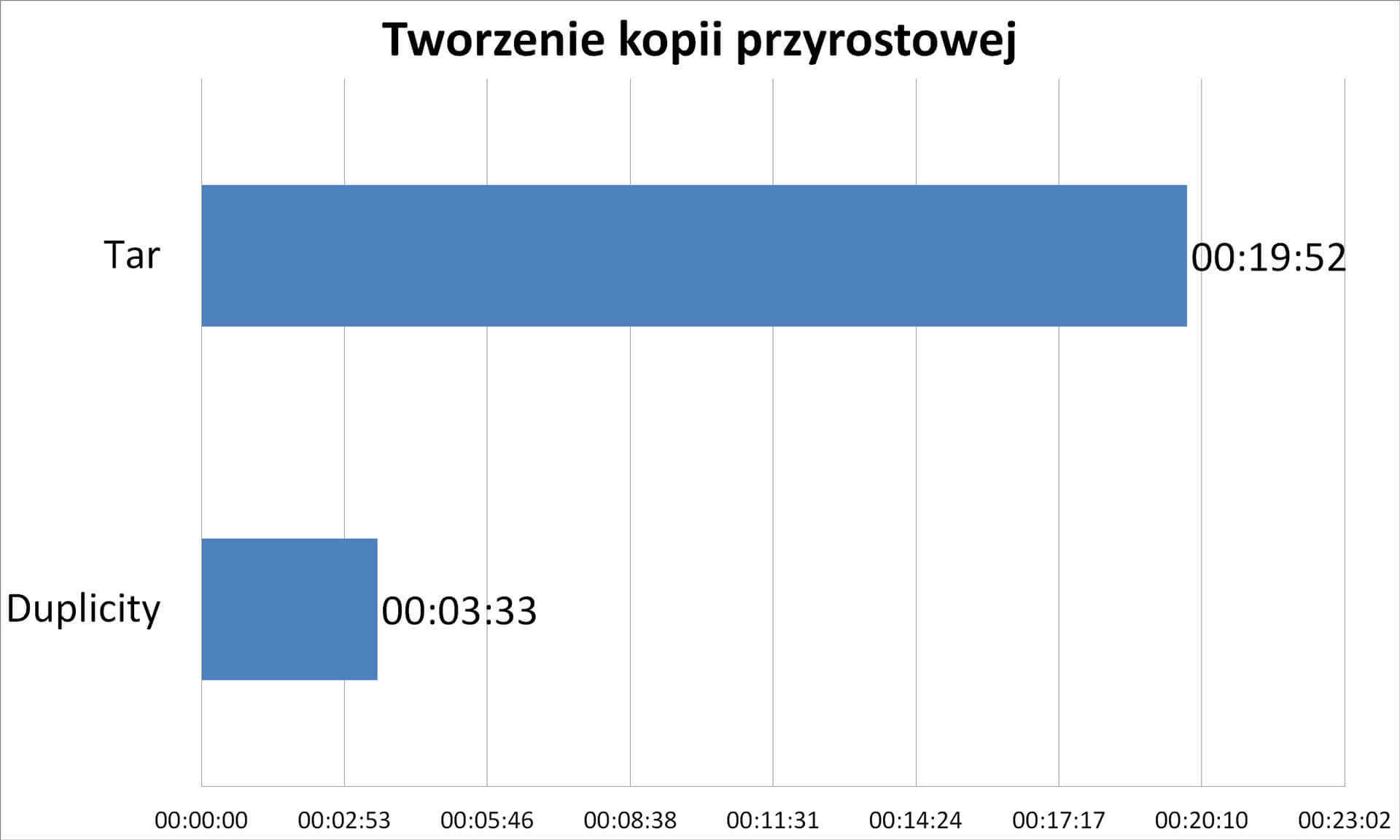

To illustrate the difference between Duplicity and tar, we ran a simple test. We created a directory containing 50 GB of randomly generated files and made a full backup of it with both Duplicity and tar. We then added another 3 GB of files and backed up the directory again: with Duplicity as an incremental backup, and with tar as a standard (full) backup.

As the chart shows, Duplicity needed only 3 minutes to check what had changed and back up the differences, while tar had to archive all the data again.
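The test can be repeated on a much smaller scale with a script like the one below. It is only a sketch. The helper names and the file counts and sizes (20 files of 512 KB, then 2 new ones) are hypothetical and far smaller than the 50 GB and 3 GB used in our test. Timings are in whole seconds, and the working directory must be an absolute path.

```sh
#!/bin/sh
# Small-scale sketch of the Duplicity vs. tar comparison.
# make_files, elapsed and run_benchmark are hypothetical helpers.

make_files() {   # make_files DIR PREFIX COUNT SIZE_KB: COUNT random files
    mkdir -p "$1" || return 1
    i=1
    while [ "$i" -le "$3" ]; do
        dd if=/dev/urandom of="$1/$2-$i.bin" bs=1024 count="$4" 2>/dev/null || return 1
        i=$((i + 1))
    done
}

elapsed() {   # elapsed COMMAND...: run COMMAND, print whole seconds taken
    start=$(date +%s)
    "$@" >/dev/null || return 1
    echo $(( $(date +%s) - start ))
}

run_benchmark() {   # run_benchmark ABSOLUTE_WORK_DIR
    work=$1
    make_files "$work/data" base 20 512 || return 1
    echo "duplicity full: $(elapsed duplicity full --no-encryption "$work/data" "file://$work/dup")s"
    echo "tar full: $(elapsed tar -cf "$work/tar-1.tar" -C "$work" data)s"
    # Add new data, then back up again.
    make_files "$work/data" new 2 512 || return 1
    echo "duplicity incremental: $(elapsed duplicity incremental --no-encryption "$work/data" "file://$work/dup")s"
    echo "tar again: $(elapsed tar -cf "$work/tar-2.tar" -C "$work" data)s"
}
```

The interesting numbers are the second pair of timings. The absolute values depend on the disk and CPU.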

Summary

Advantages

- Good project documentation

- Simple syntax

- Incremental backup speed

- Lower bandwidth usage (only changes are transferred)

- Easy backup management

- Extracting specific files from the archive very quickly

Disadvantages

- Long full backup time

- A damaged incremental backup file makes it impossible to restore data from that point in time, or from any later point in the same chain.
